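This walkthrough uses a 3-node cluster as an example. Storage uses local PVs for maximum I/O performance, and every node acts as both a master node and a data node. For the complete write-up, see: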

https://www.cuiliangblog.cn/detail/section/162609409

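System parameter tuning

Raising the file descriptor limit

Setting the environment variable

    # Edit the environment file and add the ulimit line
    vim /etc/profile
    ulimit -n 65535
    # Apply the change
    source /etc/profile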

Editing the limits.conf configuration file

    # Edit limits.conf and add the two nofile lines
    vim /etc/security/limits.conf
    * soft nofile 65535
    * hard nofile 65535

Verification
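As a quick check, the sketch below compares the current shell's open-file limit with the 65535 configured above. The helper name `nofile_ok` is hypothetical, and the check only reflects the shell it runs in, so log in again first if limits.conf was just changed.

```shell
#!/usr/bin/env bash
# Succeeds when the reported limit is "unlimited" or at least the required minimum.
nofile_ok() {
  local current="$1" required="$2"
  [ "$current" = "unlimited" ] || [ "$current" -ge "$required" ]
}

# Compare the soft limit of the current shell with the value configured above.
if nofile_ok "$(ulimit -n)" 65535; then
  echo "nofile limit OK: $(ulimit -n)"
else
  echo "nofile limit too low: $(ulimit -n)"
fi
```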

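Raising the virtual memory map count

This kernel setting can be applied directly on the host or through a privileged init container. In most cases it is set directly on the host.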

Temporary setting (the leading # is the root prompt)

    # sysctl -w vm.max_map_count=262144
    vm.max_map_count = 262144

Permanent setting

    cat >> /etc/sysctl.conf << EOF
    vm.max_map_count=262144
    EOF
    # sysctl -p
    vm.max_map_count = 262144
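Appending with `cat >>` adds a duplicate line each time the step is repeated. A minimal sketch of an idempotent alternative follows; the function name `set_sysctl_entry` is hypothetical, and the key is matched as a regular expression, so the dots in `vm.max_map_count` match any character.

```shell
#!/usr/bin/env bash
# Write key=value to a sysctl-style file, replacing any earlier line for the same key.
set_sysctl_entry() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"
  # Keep every line except existing assignments of this key.
  # grep exits non-zero when no lines remain or the file is missing, hence "|| true".
  grep -v "^[[:space:]]*${key}[[:space:]]*=" "$file" > "$tmp" 2>/dev/null || true
  echo "${key}=${value}" >> "$tmp"
  # Overwrite in place so the target file keeps its owner and permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

On a host this would be called as `set_sysctl_entry /etc/sysctl.conf vm.max_map_count 262144`, followed by `sysctl -p` as shown above.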

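Creating the local PV resources

Creating the StorageClass

The provisioner field is set to no-provisioner because Local Persistent Volumes do not yet support dynamic provisioning, so the PVs have to be created manually in advance. The volumeBindingMode field is set to WaitForFirstConsumer. This is a very important feature for Local Persistent Volumes, known as delayed binding: when a PVC is submitted, it is not bound to a PV right away. Binding is postponed until a Pod that uses the PVC is scheduled, so the node affinity of the PV can be taken into account.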

    [root@tiaoban eck]# cat > storageClass.yaml << EOF
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: local-storage
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    EOF
    [root@tiaoban eck]# kubectl apply -f storageClass.yaml
    storageclass.storage.k8s.io/local-storage created
    [root@tiaoban eck]# kubectl get storageclass
    NAME            PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    local-storage   kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  19s

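Creating the PVs

The PVs are distributed as follows:

| PV name | Host | Path | Capacity |
| --- | --- | --- | --- |
| es-data-pv0 | k8s-test1 | /data/es-data | 50Gi |
| es-data-pv1 | k8s-test2 | /data/es-data | 50Gi |
| es-data-pv2 | k8s-test3 | /data/es-data | 50Gi |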

The data directory must be created on each node beforehand.
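One way to prepare the directories is sketched below. It only prints the commands so they can be reviewed first. Root SSH access to the three nodes is an assumption, and the function name `print_mkdir_commands` is hypothetical.

```shell
#!/usr/bin/env bash
# Node names and data path taken from the PV layout in this walkthrough.
NODES="k8s-test1 k8s-test2 k8s-test3"
DATA_DIR="/data/es-data"

# Print one mkdir command per node (assumes root SSH access to each node).
print_mkdir_commands() {
  local node
  for node in $NODES; do
    echo "ssh root@${node} mkdir -p ${DATA_DIR}"
  done
}

print_mkdir_commands
```

Piping the output to `sh` runs the commands. Alternatively, run `mkdir -p /data/es-data` by hand on each node.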

Create the PV resources:

    [root@tiaoban eck]# cat > pv.yaml << EOF
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: es-data-pv0
      labels:
        app: es-pv0
    spec:
      capacity:
        storage: 50Gi
      volumeMode: Filesystem
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain # reclaim policy
      storageClassName: local-storage # StorageClass name; must match the StorageClass created earlier
      local:
        path: /data/es-data # local storage path
      nodeAffinity: # pin the volume to node k8s-test1
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - k8s-test1
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: es-data-pv1
      labels:
        app: es-pv1
    spec:
      capacity:
        storage: 50Gi
      volumeMode: Filesystem
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain # reclaim policy
      storageClassName: local-storage # StorageClass name; must match the StorageClass created earlier
      local:
        path: /data/es-data # local storage path
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - k8s-test2
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: es-data-pv2
      labels:
        app: es-pv2
    spec:
      capacity:
        storage: 50Gi
      volumeMode: Filesystem
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain # reclaim policy
      storageClassName: local-storage # StorageClass name; must match the StorageClass created earlier
      local:
        path: /data/es-data # local storage path
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - k8s-test3
    EOF
    [root@tiaoban eck]# kubectl apply -f pv.yaml
    persistentvolume/es-data-pv0 created
    persistentvolume/es-data-pv1 created
    persistentvolume/es-data-pv2 created
    [root@tiaoban eck]# kubectl get pv | grep es
    es-data-pv0   50Gi   RWO   Retain   Available   local-storage   <unset>   43s
    es-data-pv1   50Gi   RWO   Retain   Available   local-storage   <unset>   43s
    es-data-pv2   50Gi   RWO   Retain   Available   local-storage   <unset>   43s
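The three PV manifests differ only in their index and node name, so they could also be generated from one template. The following is a sketch, not part of the original procedure; the function names `render_pv` and `render_all_pvs` are hypothetical.

```shell
#!/usr/bin/env bash
# Render one local PersistentVolume manifest for the given index and node name.
render_pv() {
  local index="$1" node="$2"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-data-pv${index}
  labels:
    app: es-pv${index}
spec:
  capacity:
    storage: 50Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/es-data
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - ${node}
EOF
}

# Render all three PVs as one multi-document YAML stream.
render_all_pvs() {
  local index=0 node
  for node in k8s-test1 k8s-test2 k8s-test3; do
    if [ "$index" -gt 0 ]; then
      echo "---"
    fi
    render_pv "$index" "$node"
    index=$((index + 1))
  done
}
```

Running `render_all_pvs > pv.yaml` would produce a file equivalent to the hand-written one, minus the comments.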

Creating the PVCs

Pay attention to how each PVC name is composed: PVC name = volume_name-statefulset_name-ordinal. A label selector is then used to force each PVC to bind to a specific PV.

    [root@tiaoban eck]# cat > pvc.yaml << EOF
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: elasticsearch-data-elasticsearch-es-all-0
      namespace: elk
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 50Gi
      storageClassName: local-storage
      selector:
        matchLabels:
          app: es-pv0
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: elasticsearch-data-elasticsearch-es-all-1
      namespace: elk
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 50Gi
      storageClassName: local-storage
      selector:
        matchLabels:
          app: es-pv1
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: elasticsearch-data-elasticsearch-es-all-2
      namespace: elk
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 50Gi
      storageClassName: local-storage
      selector:
        matchLabels:
          app: es-pv2
    EOF
    [root@tiaoban eck]# kubectl create ns elk
    namespace/elk created
    [root@tiaoban eck]# kubectl apply -f pvc.yaml
    persistentvolumeclaim/elasticsearch-data-elasticsearch-es-all-0 created
    persistentvolumeclaim/elasticsearch-data-elasticsearch-es-all-1 created
    persistentvolumeclaim/elasticsearch-data-elasticsearch-es-all-2 created
    [root@tiaoban eck]# kubectl get pvc -n elk
    NAME                                        STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS    VOLUMEATTRIBUTESCLASS   AGE
    elasticsearch-data-elasticsearch-es-all-0   Pending                                      local-storage   <unset>                 53s
    elasticsearch-data-elasticsearch-es-all-1   Pending                                      local-storage   <unset>                 53s
    elasticsearch-data-elasticsearch-es-all-2   Pending                                      local-storage   <unset>                 53s

The PVCs remain Pending at this point. With WaitForFirstConsumer they are bound only once a Pod that uses them is scheduled.
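The PVC naming rule can be sketched as a small helper. It assumes, consistent with the names used in this walkthrough, that ECK names the StatefulSet `<cluster name>-es-<nodeSet name>`. The function name `pvc_name` is hypothetical.

```shell
#!/usr/bin/env bash
# Build the PVC name expected for one Pod of an ECK-managed nodeSet.
# Arguments: volumeClaimTemplate name, Elasticsearch resource name, nodeSet name, Pod ordinal.
pvc_name() {
  local claim="$1" cluster="$2" nodeset="$3" ordinal="$4"
  # Assumed StatefulSet name: <cluster>-es-<nodeset>
  echo "${claim}-${cluster}-es-${nodeset}-${ordinal}"
}

# Names for the 3-node cluster used in this walkthrough.
for ordinal in 0 1 2; do
  pvc_name elasticsearch-data elasticsearch all "$ordinal"
done
```

If the Elasticsearch resource name, the nodeSet name, or the volumeClaimTemplate name changes, the pre-created PVC names have to change with them, otherwise the StatefulSet creates new claims instead of using these.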

Deploying ECK

Deploying the CRDs

    [root@tiaoban eck]# wget https://download.elastic.co/downloads/eck/2.14.0/crds.yaml
    [root@tiaoban eck]# kubectl apply -f crds.yaml
    customresourcedefinition.apiextensions.k8s.io/agents.agent.k8s.elastic.co created
    customresourcedefinition.apiextensions.k8s.io/apmservers.apm.k8s.elastic.co created
    customresourcedefinition.apiextensions.k8s.io/beats.beat.k8s.elastic.co created
    customresourcedefinition.apiextensions.k8s.io/elasticmapsservers.maps.k8s.elastic.co created
    customresourcedefinition.apiextensions.k8s.io/elasticsearchautoscalers.autoscaling.k8s.elastic.co created
    customresourcedefinition.apiextensions.k8s.io/elasticsearches.elasticsearch.k8s.elastic.co created
    customresourcedefinition.apiextensions.k8s.io/enterprisesearches.enterprisesearch.k8s.elastic.co created
    customresourcedefinition.apiextensions.k8s.io/kibanas.kibana.k8s.elastic.co created
    customresourcedefinition.apiextensions.k8s.io/logstashes.logstash.k8s.elastic.co created
    customresourcedefinition.apiextensions.k8s.io/stackconfigpolicies.stackconfigpolicy.k8s.elastic.co created

Deploying the Operator

    [root@tiaoban eck]# wget https://download.elastic.co/downloads/eck/2.14.0/operator.yaml
    [root@tiaoban eck]# kubectl apply -f operator.yaml
    namespace/elastic-system created
    serviceaccount/elastic-operator created
    secret/elastic-webhook-server-cert created
    configmap/elastic-operator created
    clusterrole.rbac.authorization.k8s.io/elastic-operator created
    clusterrole.rbac.authorization.k8s.io/elastic-operator-view created
    clusterrole.rbac.authorization.k8s.io/elastic-operator-edit created
    clusterrolebinding.rbac.authorization.k8s.io/elastic-operator created
    service/elastic-webhook-server created
    statefulset.apps/elastic-operator created
    validatingwebhookconfiguration.admissionregistration.k8s.io/elastic-webhook.k8s.elastic.co created

Verification

    [root@tiaoban eck]# kubectl get pod -n elastic-system
    NAME                 READY   STATUS    RESTARTS   AGE
    elastic-operator-0   1/1     Running   0          2s
    [root@tiaoban eck]# kubectl get svc -n elastic-system
    NAME                     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
    elastic-webhook-server   ClusterIP   10.103.185.159   <none>        443/TCP   5m55s

Once elastic-operator-0 is in the Running state, ECK has been deployed successfully and is running on the k8s cluster.

Deploying Elasticsearch

Creating the Elasticsearch cluster

    [root@tiaoban eck]# cat > es.yaml << EOF
    apiVersion: elasticsearch.k8s.elastic.co/v1
    kind: Elasticsearch
    metadata:
      namespace: elk
      name: elasticsearch
    spec:
      version: 8.15.3
      image: harbor.local.com/elk/elasticsearch:8.15.3 # custom image; if omitted, the image is pulled from the official Elastic registry
      nodeSets:
      - name: all # nodeSet name
        count: 3 # number of nodes
        volumeClaimTemplates:
        - metadata:
            name: elasticsearch-data
          spec:
            accessModes:
            - ReadWriteOnce
            resources:
              requests:
                storage: 50Gi # storage per node; keep it consistent with the PVC capacity
            storageClassName: local-storage
        podTemplate:
          spec:
            containers:
            - name: elasticsearch
              env:
              - name: ES_JAVA_OPTS # JVM heap size of each node
                value: "-Xms1g -Xmx1g"
              resources:
                limits: # resource limits, usually twice the JVM heap
                  cpu: 1
                  memory: 2Gi
                requests: # resource requests, usually equal to the JVM heap
                  cpu: 500m
                  memory: 1Gi
    EOF
    [root@tiaoban eck]# kubectl apply -f es.yaml
    elasticsearch.elasticsearch.k8s.elastic.co/elasticsearch created

Inspecting and verifying the resources

    [root@tiaoban eck]# kubectl get pod -n elk
    NAME                     READY   STATUS    RESTARTS   AGE
    elasticsearch-es-all-0   1/1     Running   0          3m48s
    elasticsearch-es-all-1   1/1     Running   0          3m48s
    elasticsearch-es-all-2   1/1     Running   0          3m48s
    [root@tiaoban eck]# kubectl get svc -n elk
    NAME                             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    elasticsearch-es-all             ClusterIP   None            <none>        9200/TCP   4m7s
    elasticsearch-es-http            ClusterIP   10.96.196.197   <none>        9200/TCP   4m9s
    elasticsearch-es-internal-http   ClusterIP   10.98.63.89     <none>        9200/TCP   4m9s
    elasticsearch-es-transport       ClusterIP   None            <none>        9300/TCP   4m9s
    [root@tiaoban eck]# kubectl get es -n elk
    NAME            HEALTH   NODES   VERSION   PHASE   AGE
    elasticsearch   green    3       8.15.3    Ready   4m22s

Retrieving the password of the elastic user

    [root@tiaoban eck]# kubectl get secrets -n elk elasticsearch-es-elastic-user -o go-template='{{.data.elastic | base64decode}}'
    A1i529P3q783xblCSChV8zY1

Exporting the CA certificate

    [root@tiaoban eck]# kubectl -n elk get secret elasticsearch-es-http-certs-public -o go-template='{{index .data "ca.crt" | base64decode }}' > ca.crt

Access verification

    [root@rockylinux /]# curl -k 'https://elastic:A1i529P3q783xblCSChV8zY1@elasticsearch-es-http.elk.svc:9200/_cat/nodes?v'
    ip          heap.percent ram.percent cpu load_1m load_5m load_15m node.role   master name
    10.244.0.55            8          71   8    0.65    0.69     0.66 cdfhilmrstw -      elasticsearch-es-all-0
    10.244.2.32           45          72   9    0.90    0.98     0.82 cdfhilmrstw *      elasticsearch-es-all-2
    10.244.1.24           25          70   7    1.04    0.91     0.73 cdfhilmrstw -      elasticsearch-es-all-1
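Since the CA certificate has already been exported to ca.crt, the same request can be made with certificate verification instead of `-k`. The sketch below also reads the password from the secret at call time rather than pasting it into the URL. It assumes the certificate issued by ECK covers the service name `elasticsearch-es-http.elk.svc`; if it does not, curl will report a verification error. The function names `es_password` and `es_get` are hypothetical.

```shell
#!/usr/bin/env bash
# Read the elastic user's password from the secret created by ECK.
es_password() {
  kubectl get secret -n elk elasticsearch-es-elastic-user \
    -o go-template='{{.data.elastic | base64decode}}'
}

# Send a GET request to the cluster, verifying the server against the exported CA.
# Argument: request path, for example '/_cat/nodes?v'.
es_get() {
  local path="$1"
  curl --silent --show-error --cacert ca.crt \
    -u "elastic:$(es_password)" \
    "https://elasticsearch-es-http.elk.svc:9200${path}"
}
```

From a host that can resolve the service name, `es_get '/_cat/nodes?v'` should list the same three nodes as above.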

Creating the ingress resources

Create a secret from a self-signed certificate

    [root@tiaoban eck]# openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=elk-tls"
    Generating a RSA private key
    writing new private key to 'tls.key'
    [root@tiaoban eck]# kubectl create secret tls -n elk elk-tls --cert=tls.crt --key=tls.key
    secret/elk-tls created

Create the IngressRoute rule and the ServersTransport resource. Setting insecureSkipVerify makes traefik skip certificate verification when it proxies requests to the backend service. The heredoc delimiter is quoted ('EOF') so that the shell does not treat the backticks in the match rule as command substitution.

    [root@tiaoban eck]# cat > es-ingress.yaml << 'EOF'
    apiVersion: traefik.io/v1alpha1
    kind: ServersTransport
    metadata:
      name: elasticsearch-transport
      namespace: elk
    spec:
      serverName: "elasticsearch.local.com"
      insecureSkipVerify: true
    ---
    apiVersion: traefik.io/v1alpha1
    kind: IngressRoute
    metadata:
      name: elasticsearch
      namespace: elk
    spec:
      entryPoints:
      - websecure
      routes:
      - match: Host(`elasticsearch.local.com`)
        kind: Rule
        services:
        - name: elasticsearch-es-http
          port: 9200
          serversTransport: elasticsearch-transport
      tls:
        secretName: elk-tls
    EOF
    [root@tiaoban eck]# kubectl apply -f es-ingress.yaml
    serverstransport.traefik.io/elasticsearch-transport created
    ingressroute.traefik.io/elasticsearch created

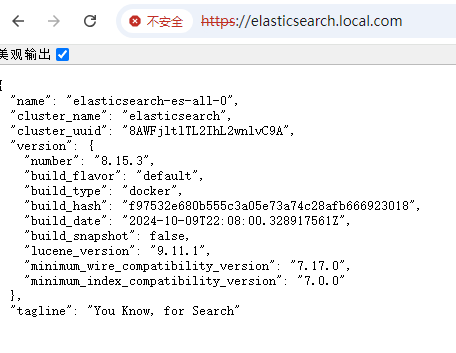

Add a hosts entry, then verify access.
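A hosts entry could be added on the client as sketched below. The IP address 192.168.10.10 is a placeholder for the address on which traefik is reachable in your environment, and the function name `add_host_entry` is hypothetical.

```shell
#!/usr/bin/env bash
# Append "ip host" to a hosts file unless a line already contains the hostname.
add_host_entry() {
  local file="$1" ip="$2" host="$3"
  # -F matches the hostname literally, -w requires a whole-word match.
  if grep -qwF -- "$host" "$file" 2>/dev/null; then
    return 0
  fi
  echo "${ip} ${host}" >> "$file"
}
```

For example, `add_host_entry /etc/hosts 192.168.10.10 elasticsearch.local.com` (run as root). The same helper works for kibana.local.com.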

Deploying Kibana

Creating the Kibana resource

    [root@tiaoban eck]# cat > kibana.yaml << EOF
    apiVersion: kibana.k8s.elastic.co/v1
    kind: Kibana
    metadata:
      name: kibana
      namespace: elk
    spec:
      version: 8.15.3
      image: harbor.local.com/elk/kibana:8.15.3
      count: 1
      elasticsearchRef: # must match the name of the Elasticsearch resource
        name: elasticsearch
      podTemplate:
        spec:
          containers:
          - name: kibana
            env:
            - name: NODE_OPTIONS
              value: "--max-old-space-size=2048"
            - name: SERVER_PUBLICBASEURL
              value: "https://kibana.local.com"
            - name: I18N_LOCALE # Chinese UI locale
              value: "zh-CN"
            resources:
              requests:
                memory: 1Gi
                cpu: 0.5
              limits:
                memory: 2Gi
                cpu: 2
    EOF
    [root@tiaoban eck]# kubectl apply -f kibana.yaml
    kibana.kibana.k8s.elastic.co/kibana created

Verification

    [root@tiaoban eck]# kubectl get pod -n elk | grep kibana
    kibana-kb-6698c6c45d-r7jj6   1/1   Running   0   3m39s
    [root@tiaoban eck]# kubectl get svc -n elk | grep kibana
    kibana-kb-http   ClusterIP   10.105.217.119   <none>   5601/TCP   3m43s
    [root@tiaoban eck]# kubectl get kibana -n elk
    NAME     HEALTH   NODES   VERSION   AGE
    kibana   green    1       8.15.3    3m50s

Creating the Ingress resources

As with the Elasticsearch ingress, the heredoc delimiter is quoted ('EOF') so that the backticks in the match rule are written to the file unchanged.

    [root@tiaoban eck]# cat > kibana-ingress.yaml << 'EOF'
    apiVersion: traefik.io/v1alpha1
    kind: ServersTransport
    metadata:
      name: kibana-transport
      namespace: elk
    spec:
      serverName: "kibana.local.com"
      insecureSkipVerify: true
    ---
    apiVersion: traefik.io/v1alpha1
    kind: IngressRoute
    metadata:
      name: kibana
      namespace: elk
    spec:
      entryPoints:
      - websecure
      routes:
      - match: Host(`kibana.local.com`)
        kind: Rule
        services:
        - name: kibana-kb-http
          port: 5601
          serversTransport: kibana-transport
      tls:
        secretName: elk-tls
    EOF
    [root@tiaoban eck]# kubectl apply -f kibana-ingress.yaml
    serverstransport.traefik.io/kibana-transport created
    ingressroute.traefik.io/kibana created

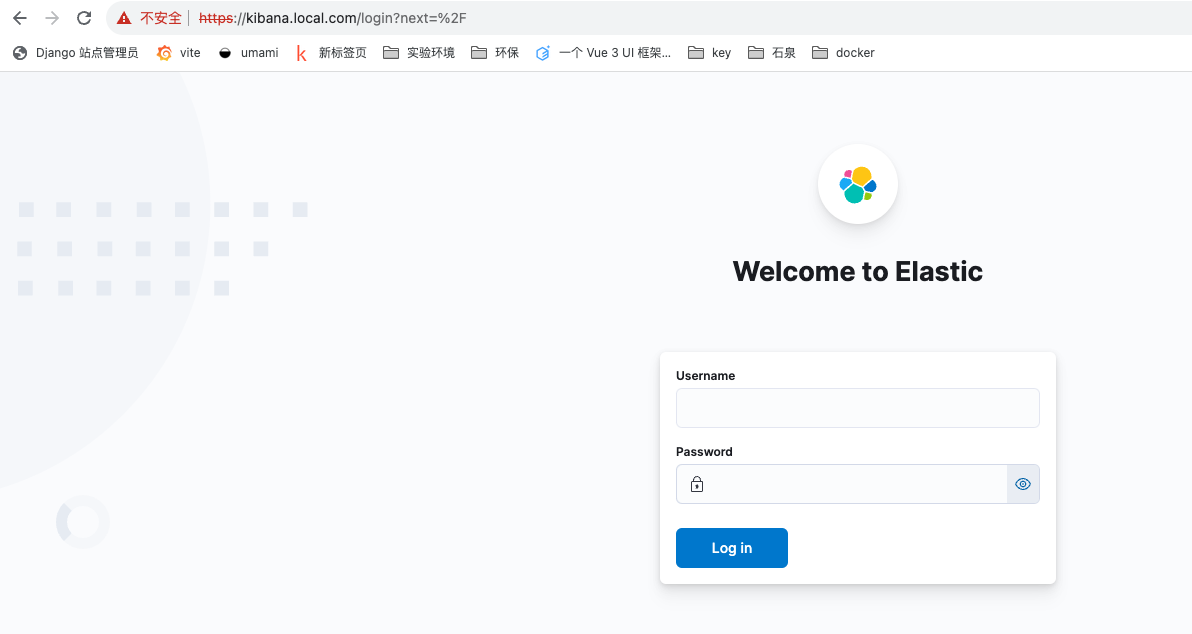

Access verification

Add a hosts record on the client, then open Kibana to test access.