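For production, the recommended way to run Kafka on Kubernetes is through an operator, and Strimzi is currently the most widely used operator for this. If the cluster holds a small amount of data, NFS shared storage is acceptable; for larger data volumes, use local PV storage.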

## Deploy the operator

### Deploy the operator with Helm

The operator can be installed either with Helm or from YAML manifests. This walkthrough uses Helm:

```bash
[root@tiaoban kafka]# helm repo add strimzi https://strimzi.io/charts/
"strimzi" has been added to your repositories
[root@tiaoban kafka]# helm pull strimzi/strimzi-kafka-operator --untar
[root@tiaoban kafka]# cd strimzi-kafka-operator
[root@tiaoban strimzi-kafka-operator]# vim values.yaml
dashboards: # load the Grafana dashboards
  enabled: true
  namespace: monitoring
# Install
[root@k8s-master traefik]# helm install strimzi -n kafka . -f values.yaml --create-namespace
NAME: strimzi
LAST DEPLOYED: Sun Oct 20 20:21:54 2024
NAMESPACE: kafka
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
Thank you for installing strimzi-kafka-operator-0.43.0

To create a Kafka cluster refer to the following documentation.

https://strimzi.io/docs/operators/latest/deploying.html#deploying-cluster-operator-helm-chart-str
[root@tiaoban strimzi-kafka-operator]# kubectl get pod -n kafka
NAME                                        READY   STATUS    RESTARTS   AGE
strimzi-cluster-operator-56fdbb99cb-gznkw   1/1     Running   0          17m
```

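### Get the example files

The official Strimzi repository provides example manifests for a range of scenarios. They can be downloaded from the releases page: https://github.com/strimzi/strimzi-kafka-operator/releases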

```bash
[root@tiaoban kafka]# ls
strimzi-kafka-operator
[root@tiaoban kafka]# wget https://github.com/strimzi/strimzi-kafka-operator/releases/download/0.43.0/strimzi-0.43.0.tar.gz
[root@tiaoban kafka]# tar -zxf strimzi-0.43.0.tar.gz
[root@tiaoban kafka]# cd strimzi-0.43.0/examples/kafka
[root@tiaoban kafka]# tree
.
├── kafka-ephemeral-single.yaml
├── kafka-ephemeral.yaml
├── kafka-jbod.yaml
├── kafka-persistent-single.yaml
├── kafka-persistent.yaml
├── kafka-with-node-pools.yaml
└── kraft
    ├── kafka-ephemeral.yaml
    ├── kafka-jbod.yaml
    ├── kafka-single-node.yaml
    ├── kafka-with-dual-role-nodes.yaml
    ├── kafka.yaml
    └── README.md
```

The top-level example files cover ZooKeeper-based clusters:

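- `kafka-persistent.yaml`: deploys a persistent cluster with three ZooKeeper and three Kafka nodes (recommended).
- `kafka-jbod.yaml`: deploys a persistent cluster with three ZooKeeper and three Kafka nodes, each Kafka node using multiple persistent volumes.
- `kafka-persistent-single.yaml`: deploys a persistent cluster with a single ZooKeeper node and a single Kafka node.
- `kafka-ephemeral.yaml`: deploys an ephemeral cluster with three ZooKeeper and three Kafka nodes.
- `kafka-ephemeral-single.yaml`: deploys an ephemeral cluster with three ZooKeeper nodes and one Kafka node.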

The files under `kraft` cover KRaft mode:

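- `kafka-with-dual-role-nodes.yaml`: deploys a Kafka cluster with one node pool whose nodes share the broker and controller roles (recommended).
- `kafka.yaml`: deploys a persistent Kafka cluster with one controller node pool and one broker node pool.
- `kafka-ephemeral.yaml`: deploys an ephemeral Kafka cluster with one controller node pool and one broker node pool.
- `kafka-single-node.yaml`: deploys a Kafka cluster with a single node.
- `kafka-jbod.yaml`: deploys a Kafka cluster with multiple volumes in each broker node.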

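## ZooKeeper mode deployment

### Create the PVC resources

This example uses NFS storage. Create the PVCs in advance: three for the ZooKeeper nodes and three for the Kafka nodes, which use them to persist their data.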

```bash
[root@tiaoban kafka]# cat kafka-pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-my-cluster-zookeeper-0
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-my-cluster-zookeeper-1
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-my-cluster-zookeeper-2
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-0-my-cluster-kafka-0
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-0-my-cluster-kafka-1
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-0-my-cluster-kafka-2
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
```
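Since the six claims differ only in their names, the file could also be generated instead of written by hand. The following is a minimal sketch and not part of the original walkthrough: `gen_pvc` and `gen_all_pvcs` are hypothetical helper names, and the claim names, namespace, storage class, and size are simply copied from `kafka-pvc.yaml` for a cluster named `my-cluster`.

```shell
#!/usr/bin/env bash

# Print a single PersistentVolumeClaim manifest.
# Namespace and storage class mirror the values used in kafka-pvc.yaml.
gen_pvc() {
  local name="$1" size="$2"
  cat <<EOF
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ${name}
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
}

# Print the three ZooKeeper claims followed by the three Kafka claims.
gen_all_pvcs() {
  local i
  for i in 0 1 2; do
    gen_pvc "data-my-cluster-zookeeper-${i}" 100Gi
  done
  for i in 0 1 2; do
    gen_pvc "data-0-my-cluster-kafka-${i}" 100Gi
  done
}
```

With these functions loaded, `gen_all_pvcs > kafka-pvc.yaml` would write the file, and `gen_all_pvcs | kubectl apply -f -` would create the claims directly.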

### Deploy Kafka and ZooKeeper

Following the `kafka-persistent.yaml` example from the official repository, deploy a persistent cluster with three ZooKeeper and three Kafka nodes.

```bash
[root@tiaoban kafka]# cat > kafka.yaml << EOF
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
  namespace: kafka
spec:
  kafka:
    version: 3.5.1 # must be a Kafka version supported by the installed operator
    replicas: 3
    listeners:
      - name: plain
        port: 9092
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
    config:
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 2
      default.replication.factor: 3
      min.insync.replicas: 2
      inter.broker.protocol.version: "3.5"
    storage:
      type: jbod
      volumes:
      - id: 0
        type: persistent-claim
        size: 100Gi
        deleteClaim: false
  zookeeper:
    replicas: 3
    storage:
      type: persistent-claim
      size: 100Gi
      deleteClaim: false
  entityOperator:
    topicOperator: {}
    userOperator: {}
EOF
```
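The walkthrough does not show the step that applies these files. A minimal sketch of it, assuming `kafka-pvc.yaml` and `kafka.yaml` sit in the current directory, might look like the following. `deploy_kafka` is a hypothetical helper name, and the 300-second timeout is an arbitrary choice; the `kubectl wait` call relies on the `Ready` condition that the operator reports on the `Kafka` resource.

```shell
#!/usr/bin/env bash

# Apply the PVCs and the Kafka resource, then block until the operator
# reports the cluster as Ready (or the timeout expires).
# Arguments (both optional): namespace, cluster name.
deploy_kafka() {
  local ns="${1:-kafka}" cluster="${2:-my-cluster}"
  kubectl apply -f kafka-pvc.yaml &&
    kubectl apply -f kafka.yaml &&
    kubectl wait "kafka/${cluster}" --for=condition=Ready --timeout=300s -n "$ns"
}
```

Calling `deploy_kafka` with no arguments targets `my-cluster` in the `kafka` namespace. If the wait times out, `kubectl describe kafka my-cluster -n kafka` is a reasonable first place to look for the reason.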

### Verify

Check the resources; the pods and services have been created successfully.

```bash
[root@tiaoban kafka]# kubectl get pod -n kafka
NAME                                          READY   STATUS    RESTARTS   AGE
my-cluster-entity-operator-7c68d4b9d9-tg56j   3/3     Running   0          2m15s
my-cluster-kafka-0                            1/1     Running   0          2m54s
my-cluster-kafka-1                            1/1     Running   0          2m54s
my-cluster-kafka-2                            1/1     Running   0          2m54s
my-cluster-zookeeper-0                        1/1     Running   0          3m19s
my-cluster-zookeeper-1                        1/1     Running   0          3m19s
my-cluster-zookeeper-2                        1/1     Running   0          3m19s
strimzi-cluster-operator-56fdbb99cb-gznkw     1/1     Running   0          97m
[root@tiaoban kafka]# kubectl get svc -n kafka
NAME                          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                                        AGE
my-cluster-kafka-bootstrap    ClusterIP   10.99.246.133   <none>        9091/TCP,9092/TCP,9093/TCP                     3m3s
my-cluster-kafka-brokers      ClusterIP   None            <none>        9090/TCP,9091/TCP,8443/TCP,9092/TCP,9093/TCP   3m3s
my-cluster-zookeeper-client   ClusterIP   10.109.106.29   <none>        2181/TCP                                       3m28s
my-cluster-zookeeper-nodes    ClusterIP   None            <none>        2181/TCP,2888/TCP,3888/TCP                     3m28s
```

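## KRaft mode deployment

### Create the PVC resources

This example uses NFS storage. Create the PVCs in advance so that Kafka can use them to persist its data.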

```bash
[root@tiaoban kafka]# cat > kafka-pvc.yaml << EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-0-my-cluster-dual-role-0
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-0-my-cluster-dual-role-1
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-0-my-cluster-dual-role-2
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
EOF
```

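### Deploy Kafka

Following the `kafka-with-dual-role-nodes.yaml` example from the official repository, deploy a persistent cluster with a single node pool of three nodes, each acting as both controller and broker.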

```bash
[root@tiaoban kafka]# cat > kafka.yaml << EOF
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaNodePool
metadata:
  name: dual-role
  namespace: kafka
  labels:
    strimzi.io/cluster: my-cluster
spec:
  replicas: 3
  roles:
    - controller
    - broker
  storage:
    type: jbod
    volumes:
      - id: 0
        type: persistent-claim
        size: 10Gi
        deleteClaim: false
        kraftMetadata: shared
---
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
  namespace: kafka
  annotations:
    strimzi.io/node-pools: enabled
    strimzi.io/kraft: enabled
spec:
  kafka:
    version: 3.8.0
    metadataVersion: 3.8-IV0
    listeners:
      - name: plain
        port: 9092
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
    config:
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 2
      default.replication.factor: 3
      min.insync.replicas: 2
  entityOperator:
    topicOperator: {}
    userOperator: {}
  kafkaExporter: {} # enable the exporter for monitoring
EOF
```

### Verify

Check the resources; the pods and services have been created successfully.

```bash
[root@tiaoban kafka]# kubectl get pod -n kafka -o wide
NAME                                          READY   STATUS    RESTARTS   AGE     IP            NODE        NOMINATED NODE   READINESS GATES
my-cluster-dual-role-0                        1/1     Running   0          10m     10.244.3.65   k8s-test4   <none>           <none>
my-cluster-dual-role-1                        1/1     Running   0          10m     10.244.1.33   k8s-test2   <none>           <none>
my-cluster-dual-role-2                        1/1     Running   0          10m     10.244.2.46   k8s-test3   <none>           <none>
my-cluster-entity-operator-5dc6767689-jmnck   2/2     Running   0          9m26s   10.244.3.66   k8s-test4   <none>           <none>
strimzi-cluster-operator-7fb8ff4bd-fzfgw      1/1     Running   0          39m     10.244.3.64   k8s-test4   <none>           <none>
[root@tiaoban kafka]# kubectl get svc -n kafka
NAME                         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                                        AGE
my-cluster-kafka-bootstrap   ClusterIP   10.107.111.213   <none>        9091/TCP,9092/TCP,9093/TCP                     21m
my-cluster-kafka-brokers     ClusterIP   None             <none>        9090/TCP,9091/TCP,8443/TCP,9092/TCP,9093/TCP   21m
```
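Running pods do not by themselves prove that messages flow. One way to check is to start a console producer and a console consumer in throwaway pods against the bootstrap service. This is a sketch rather than part of the original walkthrough, and several things in it are assumptions: the helper names `kafka_produce` and `kafka_consume`, the topic name `my-topic`, the image tag `quay.io/strimzi/kafka:0.43.0-kafka-3.8.0` (chosen to match the operator and Kafka versions used here), and that the topic either exists or can be auto-created by the brokers.

```shell
#!/usr/bin/env bash

# Assumed image: the Kafka image matching Strimzi 0.43.0 and Kafka 3.8.0.
KAFKA_IMAGE="${KAFKA_IMAGE:-quay.io/strimzi/kafka:0.43.0-kafka-3.8.0}"
# Plain (non-TLS) listener exposed by the bootstrap service.
BOOTSTRAP="${BOOTSTRAP:-my-cluster-kafka-bootstrap:9092}"

# Start an interactive console producer in a temporary pod.
# Each line typed at the prompt is sent as one message.
kafka_produce() {
  local topic="${1:-my-topic}"
  kubectl -n kafka run kafka-producer -ti --image="$KAFKA_IMAGE" \
    --rm=true --restart=Never -- \
    bin/kafka-console-producer.sh --bootstrap-server "$BOOTSTRAP" --topic "$topic"
}

# Start a console consumer in a temporary pod and read the topic
# from its first message.
kafka_consume() {
  local topic="${1:-my-topic}"
  kubectl -n kafka run kafka-consumer -ti --image="$KAFKA_IMAGE" \
    --rm=true --restart=Never -- \
    bin/kafka-console-consumer.sh --bootstrap-server "$BOOTSTRAP" --topic "$topic" --from-beginning
}
```

Run `kafka_produce` in one terminal and `kafka_consume` in another; lines typed into the producer should appear in the consumer. Both pods are removed when the command exits.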

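## Deploy kafka-ui

### Create the resources

Create a ConfigMap and an ingress resource. The ConfigMap specifies the Kafka connection address. Using Traefik as the example, an IngressRoute makes the UI reachable by domain name.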

```bash
[root@tiaoban kafka]# cat kafka-ui.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: kafka-ui-helm-values
  namespace: kafka
data:
  KAFKA_CLUSTERS_0_NAME: "kafka-cluster"
  KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: "my-cluster-kafka-brokers.kafka.svc:9092"
  AUTH_TYPE: "DISABLED"
  MANAGEMENT_HEALTH_LDAP_ENABLED: "FALSE"
---
apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: kafka-ui
  namespace: kafka
spec:
  entryPoints:
  - web
  routes:
  - match: Host(`kafka-ui.local.com`)
    kind: Rule
    services:
      - name: kafka-ui
        port: 80
[root@tiaoban kafka]# kubectl apply -f kafka-ui.yaml
configmap/kafka-ui-helm-values created
ingressroute.traefik.containo.us/kafka-ui created
```

### Deploy kafka-ui

Install kafka-ui with Helm and point it at the ConfigMap that holds its configuration.

```bash
[root@tiaoban kafka]# helm repo add kafka-ui https://provectus.github.io/kafka-ui-charts
[root@tiaoban kafka]# helm install kafka-ui kafka-ui/kafka-ui -n kafka --set existingConfigMap="kafka-ui-helm-values"
NAME: kafka-ui
LAST DEPLOYED: Mon Oct  9 09:56:45 2023
NAMESPACE: kafka
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
1. Get the application URL by running these commands:
  export POD_NAME=$(kubectl get pods --namespace kafka -l "app.kubernetes.io/name=kafka-ui,app.kubernetes.io/instance=kafka-ui" -o jsonpath="{.items[0].metadata.name}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace kafka port-forward $POD_NAME 8080:8080
```

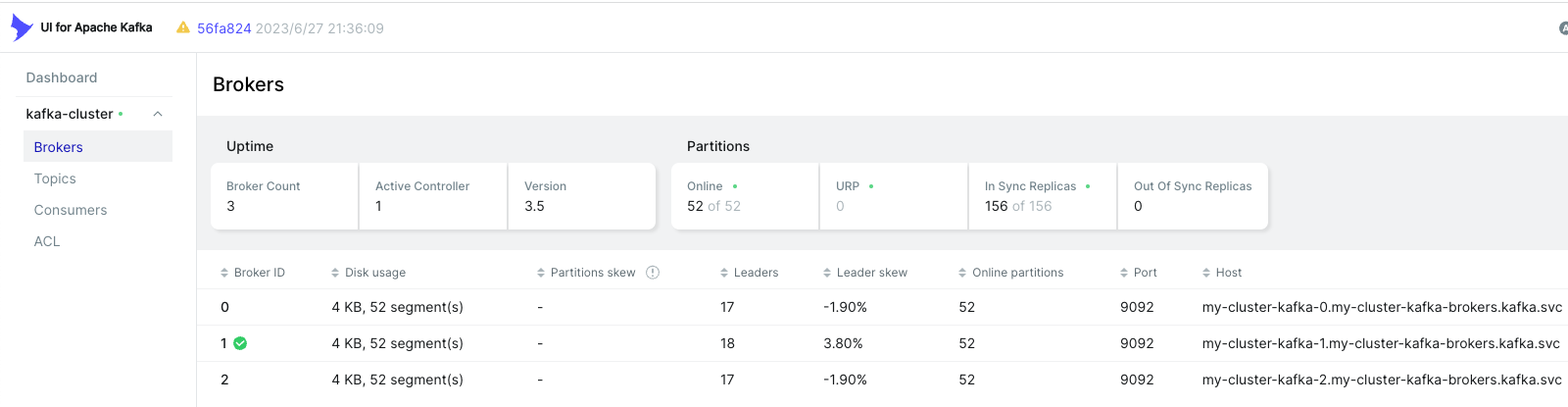

To verify access, add the hosts record `192.168.10.100 kafka-ui.local.com`, then open `kafka-ui.local.com` in a browser to test.
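On a Linux client, the hosts record could be added from the command line without creating duplicates on repeated runs. A minimal sketch follows; `add_host_entry` is a hypothetical helper name, and the default path `/etc/hosts` assumes a Linux or macOS client with root privileges.

```shell
#!/usr/bin/env bash

# Append "IP HOSTNAME" to a hosts file unless the hostname is already listed.
# Arguments: ip, hostname, optional hosts file (defaults to /etc/hosts).
add_host_entry() {
  local ip="$1" host="$2" file="${3:-/etc/hosts}"
  # Compare every hostname field exactly, so partial matches are ignored.
  if ! awk -v h="$host" '
        { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$host" >> "$file"
  fi
}
```

Run as root, `add_host_entry 192.168.10.100 kafka-ui.local.com` adds the record, after which `curl -I http://kafka-ui.local.com/` can be used as a quick check from the same machine before opening a browser.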